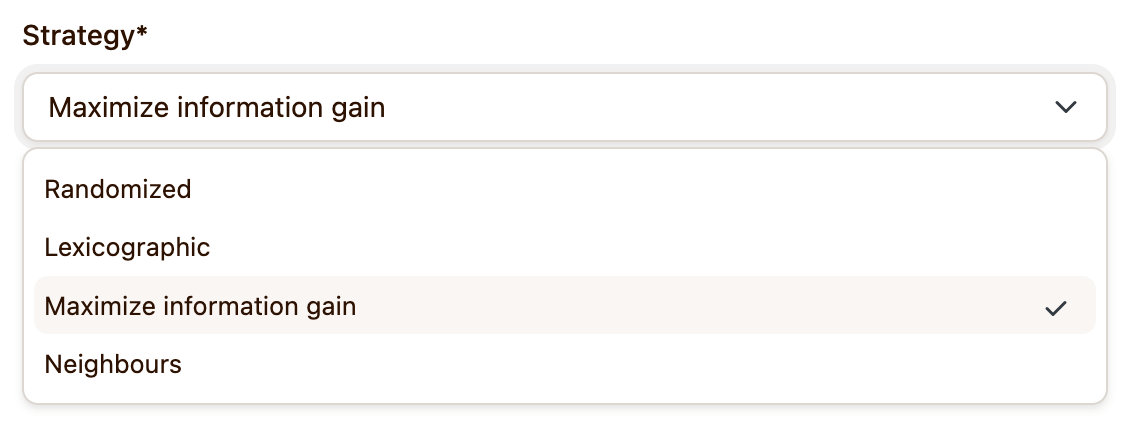

Sampling strategies control which stimuli are evaluated next. Our experiments support randomized, lexicographic, and active sampling strategies. The strategy is configured when creating a job.

Active sampling strategies try to select sets of stimuli whose evaluation will be most informative. This can significantly reduce the number of comparisons needed for robust results. Several papers have explored active selection strategies in the context of pairwise comparisons [e.g., 1, 2, 3].

A dataset on Mabyduck is a collection of folders, each corresponding to a different source or context. The media files within a folder correspond to different conditions applied to the same source. Our strategies decide how to sample folders as well as how to sample conditions within folders.

Each job maintains its own state for the purpose of active selection. That is, when creating multiple jobs for one experiment, each job will sample stimuli independently.

Our randomized sampling strategy randomizes the order in which folders and conditions are selected, but otherwise tries to sample stimuli as evenly as possible. For each session, folders are shuffled once and then sampled deterministically in round-robin fashion. When there are at least four different conditions in the dataset, conditions are sampled at random without replacement from a list of conditions. When not enough conditions remain to fill a slate, a new list is formed and used to fill the slate. This approach ensures that each condition is sampled with equal frequency while not creating a predictable order. If there are three or fewer conditions, conditions are sampled with replacement.
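The procedure described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the platform's implementation: the function name `make_slates` and its parameters are hypothetical, leftover conditions are assumed to be discarded when a new list is formed, and "with replacement" is taken to mean that each slate draws independently from the full set of conditions while conditions within one slate stay distinct.

```python
import random
from itertools import cycle


def make_slates(folders, conditions, slate_size, num_slates, seed=None):
    """Return a list of (folder, conditions) slates (hypothetical helper)."""
    if slate_size > len(conditions):
        raise ValueError("slate_size must not exceed the number of conditions")
    rng = random.Random(seed)

    # Folders are shuffled once per session, then visited round-robin.
    order = list(folders)
    rng.shuffle(order)
    folder_cycle = cycle(order)

    pool = []  # conditions not yet used from the current shuffled list
    slates = []
    for _ in range(num_slates):
        folder = next(folder_cycle)
        if len(conditions) <= 3:
            # Assumption: every slate draws independently from all conditions.
            slate = rng.sample(list(conditions), slate_size)
        else:
            # Not enough conditions left to fill a slate: form a new list.
            # Assumption: any leftover conditions are discarded.
            if len(pool) < slate_size:
                pool = list(conditions)
                rng.shuffle(pool)
            slate = [pool.pop() for _ in range(slate_size)]
        slates.append((folder, slate))
    return slates


if __name__ == "__main__":
    for folder, slate in make_slates(["a", "b", "c"], ["w", "x", "y", "z"], 2, 6, seed=0):
        print(folder, slate)
```

With four conditions and two stimuli per slate, every two consecutive slates in this sketch cover all four conditions once, which is what keeps the sampling frequencies even.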

The lexicographic strategy samples folders in lexicographic order. This gives you precise control over the order in which stimuli are presented. Within each folder, conditions are sampled randomly in the same way as in the randomized strategy.

If you also wish to control the conditions that are presented, you can do so by limiting the number of conditions per folder to the number of stimuli per slate. For example, for a single-stimulus ACR experiment, each folder would have a single media file.
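Because folders are visited in lexicographic order, folder names determine the presentation order. The following sketch assumes that "lexicographic" means plain string comparison, as performed by Python's `sorted`. The exact collation used by the platform is not stated here, and the folder names and the helper `lexicographic_order` are hypothetical.

```python
def lexicographic_order(folder_names):
    """Order folder names by plain string comparison (assumed behavior)."""
    return sorted(folder_names)


if __name__ == "__main__":
    # Without zero-padding, "clip_10" sorts before "clip_2".
    print(lexicographic_order(["clip_10", "clip_2", "clip_1"]))
    # With zero-padding, the string order matches the numeric order.
    print(lexicographic_order(["clip_10", "clip_02", "clip_01"]))
```

Under this assumption, zero-padding numeric parts of folder names is a simple way to get the intended order.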

Let $s_i$ represent the performance of condition $i$, and $s_j$ represent the performance of another condition $j$. Here, $s_i$ and $s_j$ represent the true underlying performance of the methods, which is unknown. That is, they are real-valued random variables.

Typically, the goal of an experiment is to learn the extent to which $s_i > s_j$ is true. There may be many conditions in our experiment. For some pairs, we may already have a good idea about their relative performance, while for others we are still uncertain. We want to focus our data collection efforts on pairs where additional data will be the most impactful.

Let $y_{ij}$ denote the outcome of a pairwise comparison between conditions $i$ and $j$. That is, $y_{ij}$ represents the decision by a rater. Further, let $\mathcal{D}$ be the data already collected. The information maximization strategy tries to select conditions $i$ and $j$ so as to maximize the following mutual information:
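One way to write such an objective is sketched below. It is stated as an assumption, not as the exact formula used on the platform. Write $s_i$ and $s_j$ for the performances of the two conditions, $y_{ij}$ for the rater's decision, and $\mathcal{D}$ for the data already collected. Assume the quantity of interest is the binary event $z_{ij} = [s_i > s_j]$. The mutual information between that event and the next outcome then decomposes into two entropies:

$$
I[z_{ij}; y_{ij} \mid \mathcal{D}] = H[y_{ij} \mid \mathcal{D}] - H[y_{ij} \mid z_{ij}, \mathcal{D}],
\qquad
H[y_{ij} \mid z_{ij}, \mathcal{D}] = \sum_{z \in \{0, 1\}} P(z_{ij} = z \mid \mathcal{D}) \, H[y_{ij} \mid z_{ij} = z, \mathcal{D}].
$$

The first term is large when the outcome of the comparison is hard to predict. The second term is small when knowing which condition is truly better would make the outcome predictable. Their difference is therefore largest for pairs whose ranking is still uncertain.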

We use mean-field variational inference to approximate the posterior distribution over the scores of both conditions using Gamma distributions. This makes it fairly straightforward to compute or estimate the entropies on the right-hand side.
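To illustrate how such entropies might be estimated from Gamma posteriors, here is a minimal Monte Carlo sketch. It rests on several assumptions that the text above does not confirm: the scores of the two conditions have independent Gamma posteriors with the given shape and rate parameters, a rater prefers the first condition with the Bradley-Terry probability $s_i / (s_i + s_j)$, and the mutual information is taken between the rater's decision and the event $s_i > s_j$. The function names are hypothetical.

```python
import math
import random


def binary_entropy(p):
    """Entropy in nats of a Bernoulli(p) variable."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log(p) - (1.0 - p) * math.log(1.0 - p)


def estimate_information(shape_i, rate_i, shape_j, rate_j, num_samples=20000, seed=0):
    """Monte Carlo estimate of I[s_i > s_j; y | D] under the stated assumptions."""
    rng = random.Random(seed)
    sum_p = 0.0      # sum of preference probabilities over all samples
    sum_p_win = 0.0  # same sum, restricted to samples with s_i > s_j
    num_win = 0
    for _ in range(num_samples):
        # random.gammavariate takes (shape, scale); scale = 1 / rate.
        s_i = rng.gammavariate(shape_i, 1.0 / rate_i)
        s_j = rng.gammavariate(shape_j, 1.0 / rate_j)
        p = s_i / (s_i + s_j)  # assumed Bradley-Terry preference probability
        sum_p += p
        if s_i > s_j:
            sum_p_win += p
            num_win += 1
    num_lose = num_samples - num_win

    # H[y | D]: entropy of the posterior predictive outcome.
    marginal = binary_entropy(sum_p / num_samples)

    # H[y | z, D]: entropy averaged over the two values of the event z.
    conditional = 0.0
    if num_win:
        conditional += num_win / num_samples * binary_entropy(sum_p_win / num_win)
    if num_lose:
        conditional += num_lose / num_samples * binary_entropy((sum_p - sum_p_win) / num_lose)
    return marginal - conditional


if __name__ == "__main__":
    print(estimate_information(2.0, 2.0, 2.0, 2.0))     # uncertain pair
    print(estimate_information(50.0, 5.0, 50.0, 50.0))  # well-separated pair
```

In this sketch, a pair with overlapping posteriors yields a larger estimate than a pair whose ranking is already clear, which matches the intuition that additional comparisons should focus on uncertain pairs.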

As a guardrail and for practical reasons, we do not only consider the single most informative pair. Instead, we construct a list of pairs by considering every condition, and pairing it with the most informative other condition. We then randomly sample from this list of pairs.
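The pair-list construction can be sketched as follows, assuming the information values for all pairs are already available as a matrix. The names `candidate_pairs`, `sample_pair`, and `information` are hypothetical. Whether duplicate pairs such as (0, 2) and (2, 0) are merged is not specified above, so this sketch keeps both.

```python
import random


def candidate_pairs(information):
    """Pair every condition with its most informative other condition.

    `information[i][j]` is a hypothetical matrix holding the information
    value of comparing condition i with condition j.
    """
    n = len(information)
    pairs = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        best = max(others, key=lambda j: information[i][j])
        pairs.append((i, best))
    return pairs


def sample_pair(information, rng=random):
    """Sample uniformly at random from the list of candidate pairs."""
    return rng.choice(candidate_pairs(information))


if __name__ == "__main__":
    info = [
        [0.0, 0.1, 0.5],
        [0.1, 0.0, 0.3],
        [0.5, 0.3, 0.0],
    ]
    print(candidate_pairs(info))
    print(sample_pair(info, random.Random(0)))
```

Because every condition contributes one pair to the list, no condition is starved of comparisons even if a single pair dominates the information values.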

This simple strategy orders conditions based on their current Elo scores, then lists all possible pairs of conditions with neighboring scores. Stimulus pairs are then sampled from this list uniformly at random.
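A minimal sketch of this neighbor-based strategy, assuming that "neighboring" means immediately adjacent in the Elo ranking. The function names and the example scores are hypothetical.

```python
import random


def neighbor_pairs(elo):
    """List pairs of conditions that are adjacent when ordered by Elo score.

    `elo` maps a condition name to its current Elo score.
    """
    ranked = sorted(elo, key=elo.get)
    return list(zip(ranked, ranked[1:]))


def sample_neighbor_pair(elo, rng=random):
    """Sample one neighboring pair uniformly at random."""
    return rng.choice(neighbor_pairs(elo))


if __name__ == "__main__":
    scores = {"a": 1500, "b": 1620, "c": 1480, "d": 1550}
    print(neighbor_pairs(scores))
    print(sample_neighbor_pair(scores, random.Random(0)))
```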

[1] Chen et al. (2013). Pairwise ranking aggregation in a crowdsourced setting.

[2] Maystre and Grossglauser (2017). Just Sort It! A Simple and Effective Approach to Active Preference Learning.

[3] Mikhailiuk et al. (2020). ASAP: Active sampling for pairwise comparisons via approximate message passing and information gain maximization.